How To Get A Better Disk Performance In Docker For Mac

- Be sure the 'Disk image location' value ends with the file name 'Docker.raw'. Using the raw format ensures you are on the latest disk image format, which performs better. Hopefully you will see much better performance as Docker for Mac continues to improve. Submitted by Mark Shust on Tue, - 16:50. Submitted by btamm.

- Apr 11, 2016 After upgrading to beta6, this issue persists. My test suite for one of my microservices takes 100 seconds to execute under docker-machine on VirtualBox, and 238 seconds under Docker for Mac Beta. Underlying cause still seems to be CPU-bound disk access in the latter. The former does not experience this issue.

The default file sharing performance of Docker for Mac can be poor. Here are a few small tips to improve disk I/O in Docker for Mac.

Recent Docker versions (17.04 CE Edge onwards) make it possible to specify the consistency requirements for a bind-mounted directory. The flags are:

- consistent: Full consistency. The container runtime and the host maintain an identical view of the mount at all times. This is the default.
- cached: The host's view of the mount is authoritative. There may be delays before updates made on the host are visible within a container.
- delegated: The container runtime's view of the mount is authoritative. There may be delays before updates made in a container are visible on the host.
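As a minimal sketch of how one of these flags is attached to a bind mount, the helper below builds the value passed to docker run -v. The function name bind_mount_arg and the example paths are hypothetical and only serve the illustration; the three mode names are the ones listed above.

```shell
#!/bin/sh
# bind_mount_arg is a hypothetical helper, not part of Docker.
# It builds a "host:container:mode" string for `docker run -v`.
bind_mount_arg() {
  host_dir=$1
  container_dir=$2
  mode=${3:-consistent}   # consistent is the default mode
  case "$mode" in
    consistent|cached|delegated) ;;
    *) echo "unknown consistency mode: $mode" >&2; return 1 ;;
  esac
  printf '%s:%s:%s\n' "$host_dir" "$container_dir" "$mode"
}

# Example invocation (needs Docker 17.04 or later, so it is not run here):
#   docker run --rm -it -v "$(bind_mount_arg "$PWD" /app cached)" alpine ash
bind_mount_arg /Users/me/project /app cached
```

Validating the mode up front means a typo such as "cache" is reported by the helper rather than by the Docker CLI.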



In 17.04, cached is significantly faster than consistent for many workloads. However, delegated currently behaves identically to cached. The Docker for Mac team plans to release an improved implementation of delegated in the future, to speed up write-heavy workloads. We also plan to further improve the performance of cached and consistent. We will post updates relating to Docker for Mac file sharing performance in comments to this issue. Users interested in news about performance improvements should subscribe here. To keep the signal-to-noise ratio high we will actively moderate this issue, removing off-topic advertisements, etc.

Questions about the implementation, the behaviour, and the design are very welcome, as are realistic benchmarks and real-world use cases.

A rudimentary real-world example (Drupal 8):

Docker for Mac: Version 17.05.0-ce-rc1-mac8 (16582)

Mac-mini :: MacOS Sierra: 10.12.2 (16C67)

A simple command line curl test (average of 10 calls to the URL), Drupal 8 clean install frontend:

- old UNISON (custom synced container approach) volume mount: 0.470s
- normal volume mount: 1.401s
- new :cached volume mount: 0.490s

Very easy implementation to add to my compose.yaml files, and I am happy with any delay between host/output using cached on the host codebase. Is there a rough/expected release date for 17.04 (stable)?

Yes, I could do that. I'd have to do that for each of the 12 docker-compose projects, and I'd have to keep it in sync with changes to the 'master' docker-compose.yml. As an intermediary measure: fine. Long term: no, as it is not really don't-repeat-yourself :-) If somebody wants to enable the 'cached' behaviour, that person probably wants to use it for all/most of the dockers. Would it make sense as a config setting in the Docker app itself, in the preferences' 'File sharing' tab? (This should probably be its own ticket, I presume?)

The issue text states:

delegated: The container runtime's view of the mount is authoritative. There may be delays before updates made in a container are visible on the host.

Does this mean that if I were to use delegated (or cached, for that matter), syncing would strictly be a one-way affair? In other words, to make my question clearer, let's say I have a codebase in a directory and I mount this directory inside a container using delegated. Does this mean that if I update the codebase on the host, the container will overwrite my changes? What I understood until now was that using, for example, delegated would keep syncing 'two-way' but make it more efficient from the container to the host. The text leads me to think that I may have misunderstood, hence the question.

Just posting another data point:

- Drupal codebase volume with rw,delegated on Docker Edge (for now, it's only using the cached option though): 37 requests/sec
- Drupal codebase volume with rw (and no extra options): 2 requests/sec

Drupal 8 is 18x faster if you're using a Docker volume to share a host codebase into a container, and it's pretty close to native filesystem performance. With cached, Drupal and Symfony development are no longer insanely painful with Docker. With delegated, that's even more true, as operations like composer update (which results in many writes) will also become orders-of-magnitude faster!
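The curl timings quoted in this thread are described as an average over 10 calls. A rough sketch of how such a measurement could be scripted is shown below; the helper names time_url and average and the placeholder URL are assumptions for illustration, not the commenters' actual scripts. The only curl feature relied on is the --write-out variable time_total.

```shell
#!/bin/sh
# average: read one number per line on stdin and print the mean
# with three decimal places (prints nothing for empty input).
average() {
  awk '{ sum += $1; n++ } END { if (n > 0) printf "%.3f\n", sum / n }'
}

# time_url: print the total request time in seconds, one line per call.
# Hypothetical helper; the second argument defaults to 10 calls.
time_url() {
  url=$1
  calls=${2:-10}
  i=0
  while [ "$i" -lt "$calls" ]; do
    curl -s -o /dev/null -w '%{time_total}\n' "$url"
    i=$((i + 1))
  done
}

# Example against a local site (placeholder URL, not run here):
#   time_url http://localhost:8080/ 10 | average
printf '1.0\n2.0\n3.0\n' | average
```

Running the same command once with a plain bind mount and once with :cached gives two averages that can be compared directly.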

I'm seeing great results for running a set of Behat tests on a Drupal 7 site:

On Mac OS, without :cached:

10:02 $ docker exec clientsitephp bin/behat -c tests/behat.yml --tags=@failing -f progress

69 scenarios (69 passed), 329 steps (329 passed), 11m26.20s (70.82Mb)

On Mac OS, with :cached:

09:55 $ docker exec clientsitephp bin/behat -c tests/behat.yml --tags=@failing -f progress

69 scenarios (69 passed), 329 steps (329 passed), 4m33.77s (63.33Mb)

On Travis CI (without :cached):

$ docker exec clientsitephp bin/behat -c tests/behat.yml --tags=@failing -f progress

69 scenarios (69 passed), 329 steps (329 passed), 4m7.07s (55.01Mb)

On Travis CI (with :cached):

247.12s$ docker exec cliensitephp bin/behat -c tests/behat.yml --tags=@failing -f progress

69 scenarios (69 passed), 329 steps (329 passed), 4m6.71s (55.01Mb)

Nice work, Docker team!

Both :cached and (when it lands) :delegated perform two-way 'syncing'. The text you quote is saying that, with :cached, the container may read stale data if it has changed on the host and the invalidation event hasn't propagated yet. With :cached, the container will write-through and no new write-write conflicts can occur (POSIX still allows multiple writers). Think of :cached as 'read caching'.

With :delegated, if the container writes to a file, that write will win even if an intermediate write has occurred on the host. Container writes can be delayed indefinitely but are guaranteed to persist after the container has successfully exited. Flush and similar functionality will also guarantee persistence. Think of :delegated as 'read-write caching'. Even under :delegated, synchronization happens in both directions and updates may occur rapidly (but don't have to). Additionally, you may overlap :cached and :delegated, and :cached semantics will override :delegated semantics. See guarantee 5.

If you are using :delegated for source code but your container does not write to your code files (this seems unlikely but maybe it auto-formats or something?), there is nothing to worry about. :delegated is currently the same as :cached but will provide write caching in the future.

:cached and :delegated (and :default and :consistent) form a partial order (see ). They can't be combined but they do degrade to each other. This allows multiple containers with different requirements to share the same bind mount directories safely.

/Applications/Docker.app/Contents/MacOS/com.docker.osxfs state should show you the host directories that are mounted into containers and their mount options. For example, after I run docker run --rm -it -v :/host:cached alpine ash, I then see:

$ /Applications/Docker.app/Contents/MacOS/com.docker.osxfs state

Exported directories:

- /Users to /Users (nodes/Users table size: 62)
- /Volumes to /Volumes (nodes/Volumes table size: 0)
- /tmp to /tmp (nodes/private/tmp table size: 9)
- /private to /private (nodes/private table size: 0)

Container-mounted directories:

- /Users/dsheets into w8f501bc1a0b7eb393b08930be638545a55fd06e420e (state=cached)

The specification for consistency flags describes what happens when there are multiple mounts with different consistency flags:

- If there is no overlap between the mounts, then each mount has the specified semantics (consistent, cached, or delegated), just as you'd expect.
- If some portion of the mounts overlaps, then the overlapping portion has all the guarantees of both flags. In practice, this means that the overlapping portion behaves with the more consistent semantics (e.g. if you mount the same directory with both consistent and cached then it behaves according to the consistent specification).
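The overlap rule can be pictured as picking the strictest flag among all mounts that cover a path. The sketch below models only that ordering (consistent over cached over delegated, as described in this thread); the function names mode_rank and effective_mode are hypothetical, and nothing here queries Docker itself.

```shell
#!/bin/sh
# mode_rank: map a consistency flag to a number, where a higher
# number means stronger consistency guarantees.
mode_rank() {
  case "$1" in
    delegated)  echo 1 ;;
    cached)     echo 2 ;;
    consistent) echo 3 ;;
    *) return 1 ;;
  esac
}

# effective_mode: given the flags of all mounts that overlap on a
# path, print the flag whose semantics the overlap would follow.
effective_mode() {
  best=""
  best_rank=0
  for mode in "$@"; do
    rank=$(mode_rank "$mode") || { echo "unknown mode: $mode" >&2; return 1; }
    if [ "$rank" -gt "$best_rank" ]; then
      best=$mode
      best_rank=$rank
    fi
  done
  echo "$best"
}

# A directory mounted once as cached and once as consistent:
effective_mode cached consistent
```

This is why adding :cached to a subdirectory has no effect while a parent directory is also mounted with the default consistent flag.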

Is there a way to mount a subdirectory of a bind-mounted directory as cached? Let's say I have the following:

volumes:

- ./static/stylesheets:/app/static/stylesheets:cached
- .:/app

My understanding is that :cached will have no effect for the above mount because I mount the entire PWD, and overlapping portions of bind mounts obey the most consistent mount's setting. This makes sense in a lot of cases, but it can cause severe configuration bloat in others.

To get the above example working, I would have to individually mount each top-level subdirectory / file rather than .:/app so that I can control the overlap. I have projects that have tens of folders and top-level files, with new ones being added at times. You can imagine the volumes config will become hard to maintain, and may get out of sync with what is actually in the pwd.

D4M is not 'free software', it's commercial software given away for no cost. It is not open source, nor is the OSXFS file system implementation whose performance is in question here. If they were open source, many of the people involved on this thread (myself included) would have already contributed to improving the perf of these components. Until Docker Inc. decides to open source these components, we have no choice but to whine and complain about the poor performance on GitHub and hope that Docker team members decide to fix them.

Open source isn't the only model that could work here, either. If this was a standalone piece of commercial software, I'd gladly pay for it, and the purchases could help fund a dev team that was dedicated to actually improving it. Right now D4M is in the worst of all possible places: closed-source commercial software with no revenue stream to fund improvements to it.

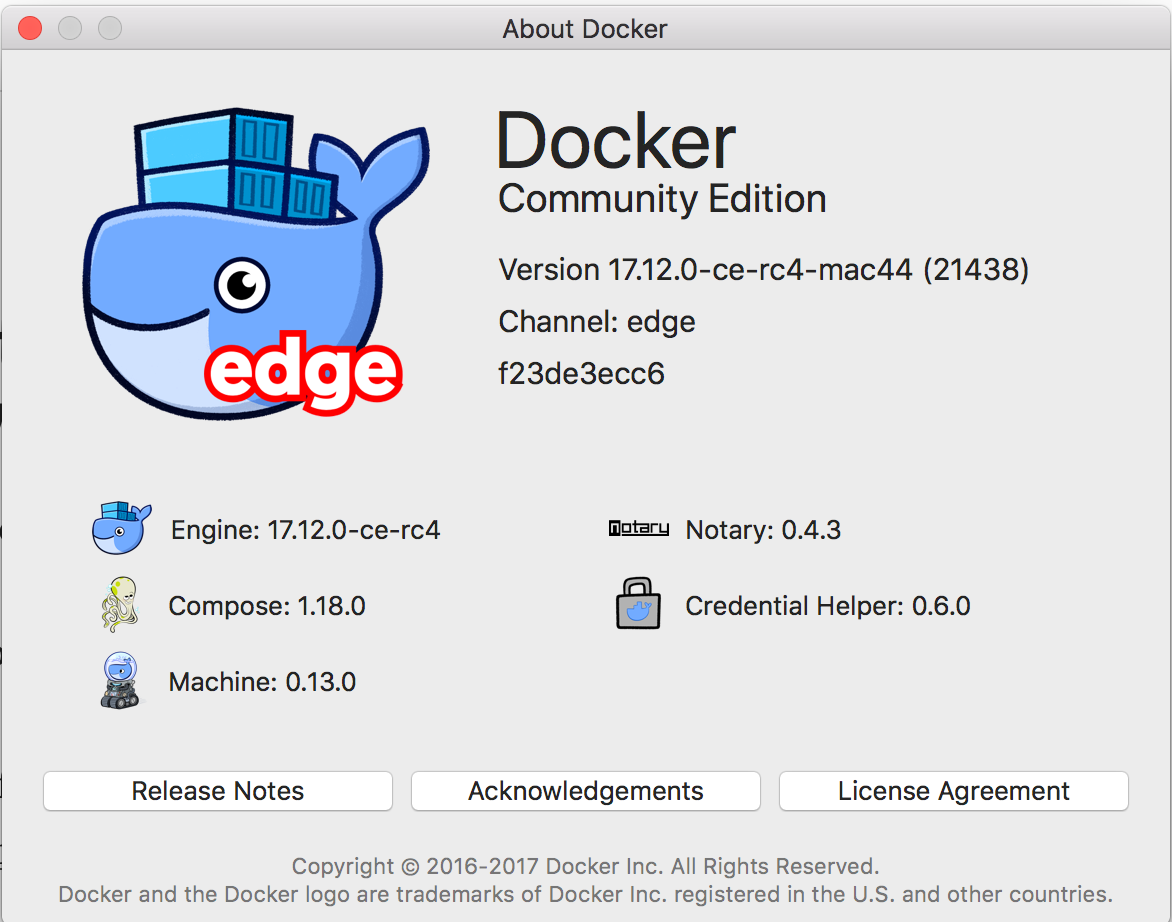

In the release notes for 'Docker Community Edition 17.11.0-ce-rc4-mac39 2017-11-17 (Edge)' it says that to use this new format (instead of the qcow2 format): if you want to switch to raw format you need to reset to defaults. This does not work. I'm still stuck with Docker.qcow2 as the backing store.

When Docker starts up it checks for the ability to use sparse files in the directory containing the image. Could you show your ~/Library/Group Containers/group.com.docker/settings.json, which should contain a line like

"diskPath": "/Users/foo/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.raw",

Could you confirm that the directory mentioned there is on your local SSD device and formatted with apfs by checking the output of mount and the output of diskutil list and then diskutil info /dev/diskNN? For reference I have

$ mount

/dev/disk1s1 on / (apfs, local, journaled)

$ diskutil list

/dev/disk1 (synthesized):

- 0: APFS Container Scheme, +499.4 GB, disk1 (Physical Store disk0s2)
- 1: APFS Volume Macintosh HD, 461.5 GB, disk1s1
- 2: APFS Volume Preboot, 21.9 MB, disk1s2
- 3: APFS Volume Recovery, 520.8 MB, disk1s3
- 4: APFS Volume VM, 9.7 GB, disk1s4

and

$ diskutil info /dev/disk1

- Device Identifier: disk1
- Device Node: /dev/disk1
- Whole: Yes
- Part of Whole: disk1
- Device / Media Name: APPLE SSD SM0512F
- Solid State: Yes
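A quick way to see which image format is configured is to read the diskPath value out of settings.json and look at its extension. The sketch below assumes the file contains a "diskPath" line shaped like the one quoted in this thread; the helper names disk_path_from_settings and image_format are hypothetical, and the settings path in the usage comment may differ on other installs.

```shell
#!/bin/sh
# disk_path_from_settings: print the value of the "diskPath" key
# from the settings file given as the first argument.
disk_path_from_settings() {
  sed -n 's/.*"diskPath"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# image_format: classify a disk image path by its file extension.
image_format() {
  case "$1" in
    *.raw)   echo raw ;;
    *.qcow2) echo qcow2 ;;
    *)       echo unknown ;;
  esac
}

# Example on a Mac (not run here):
#   path=$(disk_path_from_settings "$HOME/Library/Group Containers/group.com.docker/settings.json")
#   image_format "$path"
#   ls -ls "$path"   # first column shows allocated blocks of the sparse image
image_format "/Users/foo/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/Docker.raw"
```

If the result is qcow2, the configured path still points at the old image.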

The path was set with the old qcow2 format. I tried the same, and it now works (verified with ls -ls, to make sure the image grows properly).

Could this explain the issue?